Neo-Nazi groups have been permitted to remain on Facebook because they do not violate the social media platform’s “community standards”.

A Counter Extremism Project (CEP) report, seen by the Independent, revealed that pages operated by international white supremacist organisations – including the far-right Combat 18, the Misanthropic Division and the British Movement – were not removed by Facebook.

Instead, administrators told researchers from the project to “unfollow” pages that they found offensive.

Of the pages reported, several included racist and homophobic statements. One post described non-white citizens as “vermin” while another labelled members of the LGBTQ+ community “degenerates”.

Images of Nazi leader Adolf Hitler and swastikas also featured heavily, as did Nazi merchandise, the profits from which were used to fund supremacist activity.

“When neo-Nazi groups that clearly violate the platform’s policies are allowed to maintain pages, it raises serious questions regarding Facebook’s commitment to their own policies,” the CEP’s report concluded.

How Did Facebook Respond To The Report?

According to Facebook’s community standards, hate speech is defined in much the same way as it is in British law: a discriminatory and “direct attack” on an individual based on a number of characteristics that are protected under the Equality Act 2010.

These characteristics include race, religious beliefs and sexual orientation.

A spokesperson for Facebook told the Independent: “We want Facebook to be a safe place and we will continue to invest in keeping harm, terrorism, and hate speech off the platform.

“Our community standards ban dangerous individuals and organisations, and we have taken down the content that violates this policy. Every day we are working to keep our community safe, and our team is always developing and reviewing our policies.

“We’re also creating a new independent body for people to appeal content decisions and working with governments on regulation in areas where it doesn’t make sense for a private company to set the rules on its own.”

Facebook’s own figures suggest some effort to remove hateful content: around 2.5 million pieces of hate speech were deleted in the first quarter of 2018.

However, this fails to explain an apparent blind spot for white supremacist activity on the global network, which is used by 2.32 billion people every month.

Could Freedom Of Speech Be Used As An Explanation For Facebook’s Behaviour?

Under Article 10 of the Human Rights Act 1998, everyone has the right to freedom of expression and information. This includes the freedom to hold opinions and to receive and impart information and ideas “without interference by public authority and regardless of frontiers”.

However, this is subject to certain restrictions that are “prescribed by law” and “necessary in a democratic society”.

So Freedom Of Expression Doesn’t Mean We Can Say Whatever We Want With Impunity?

As the Act explains, this freedom carries duties and responsibilities, and it can be restricted for purposes that include preventing crime, maintaining national security and protecting the rights of others.

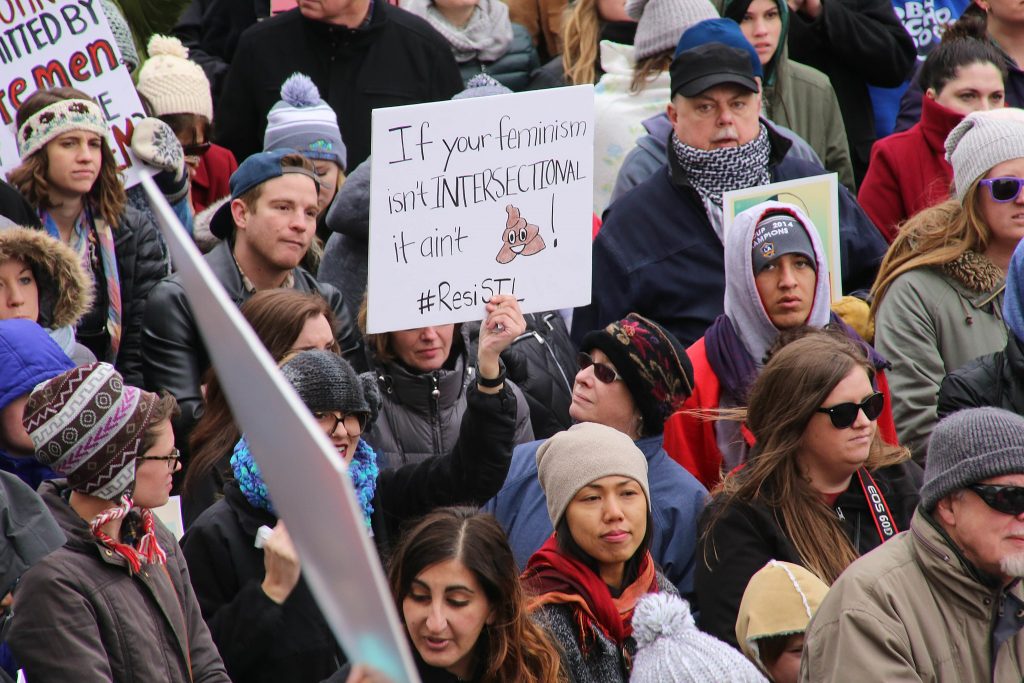

Image credit: Speech for inclusion/ Wikimedia

Offensive speech that targets specific groups of people with protected characteristics, for example, is a hate crime under the Public Order Act 1986. Other relevant legislation includes the Criminal Justice and Immigration Act 2008, which criminalises inciting hatred based on sexual orientation.

It could be argued that these white supremacist Facebook groups – many of which promote the same racist ideology published in a manifesto by the Christchurch attacker, who killed 50 people at two mosques in New Zealand last week – are also a threat to national security in the UK.

Does This Mean Facebook Could Be In Trouble For Not Removing These Groups?

Potentially, yes. While the Human Rights Act doesn’t bind Facebook directly, many of the same debates and questions still arise.

The social network is already under fire for not removing a live stream of the Christchurch terrorist shooting people in New Zealand before it was reported to police, and MPs have signalled that strict new laws should be introduced.

The British government is currently preparing to publish a white paper on tackling “online harms”, which could well recommend legal requirements to restrict such pages.